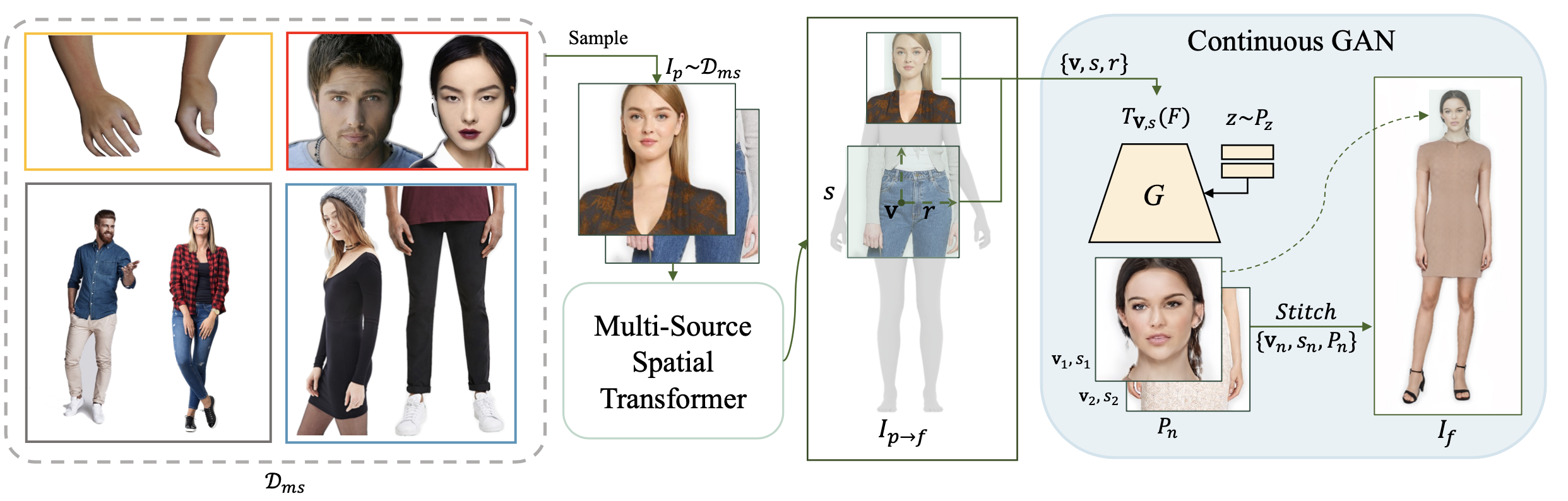

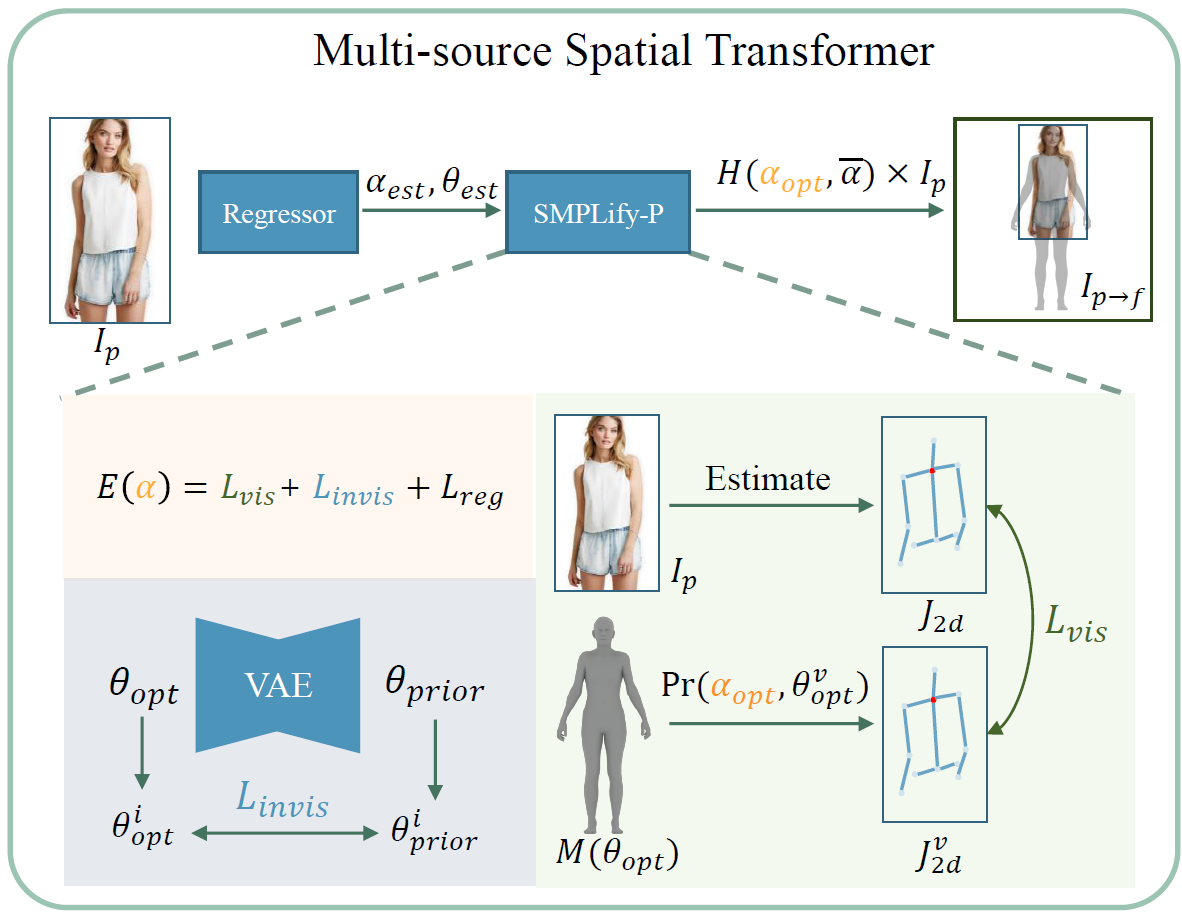

Human generation has achieved significant progress. Nonetheless, existing methods still struggle to synthesize specific regions such as faces and hands. We argue that the main reason lies in the training data: a holistic human dataset inevitably provides insufficient, low-resolution information about local parts. We therefore propose to use multi-source datasets with images of various resolutions to jointly learn a high-resolution human generative model. However, multi-source data inherently a) contains different parts that do not spatially align into a coherent human, and b) comes at different scales. To tackle these challenges, we propose an end-to-end framework, UnitedHuman, that enables a continuous GAN to effectively utilize multi-source data for high-resolution human generation. Specifically, 1) we design a Multi-Source Spatial Transformer that spatially aligns multi-source images to the full-body space with a human parametric model; 2) we propose a continuous GAN with global-structural guidance. Patches from different datasets are then sampled and transformed to supervise the training of this scale-invariant generative model. Extensive experiments demonstrate that our model, jointly learned from multi-source data, achieves higher quality than models learned from a holistic dataset.
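The abstract does not spell out how the Multi-Source Spatial Transformer works internally, so the following is only a hypothetical sketch of the general idea: place a local-part image (e.g. a face crop) into a shared, normalized full-body coordinate frame, then sample fixed-size patches whose pixel centres are expressed in that frame, so that a continuous (coordinate-conditioned) generator could be supervised at any scale. As a loud assumption, alignment here is a least-squares uniform-scale-plus-translation fit to keypoint correspondences, standing in for the human parametric model used by UnitedHuman. All function names (`fit_scale_translation`, `patch_coords`, `sample_patch`) are illustrative, not the authors' code.

```python
import random
from typing import List, Tuple

Point = Tuple[float, float]


def fit_scale_translation(src: List[Point], dst: List[Point]) -> Tuple[float, float, float]:
    """Least-squares fit of dst ~= s * src + t (uniform scale, no rotation).

    Hypothetical stand-in for parametric-model alignment: src are keypoints in
    source-image pixels, dst are the same keypoints in normalized full-body
    coordinates ([0, 1] x [0, 1]).
    """
    n = len(src)
    if n < 2 or n != len(dst):
        raise ValueError("need at least two matching correspondences")
    sx = sum(p[0] for p in src) / n
    sy = sum(p[1] for p in src) / n
    dx = sum(q[0] for q in dst) / n
    dy = sum(q[1] for q in dst) / n
    num = sum((p[0] - sx) * (q[0] - dx) + (p[1] - sy) * (q[1] - dy) for p, q in zip(src, dst))
    den = sum((p[0] - sx) ** 2 + (p[1] - sy) ** 2 for p in src)
    if den == 0:
        raise ValueError("source keypoints are degenerate")
    s = num / den
    return s, dx - s * sx, dy - s * sy


def patch_coords(s: float, tx: float, ty: float, x0: int, y0: int, size: int) -> List[List[Point]]:
    """Full-body coordinates of the pixel centres of a size x size patch
    whose top-left source pixel is (x0, y0); row index = y, column index = x."""
    return [[(s * (x0 + j + 0.5) + tx, s * (y0 + i + 0.5) + ty) for j in range(size)]
            for i in range(size)]


def sample_patch(width: int, height: int, s: float, tx: float, ty: float,
                 size: int, rng: random.Random) -> Tuple[Tuple[int, int], List[List[Point]]]:
    """Pick a random patch fully inside a width x height source image and
    return its top-left corner plus its full-body coordinate grid."""
    if size > width or size > height:
        raise ValueError("patch larger than image")
    x0 = rng.randint(0, width - size)   # randint is inclusive on both ends
    y0 = rng.randint(0, height - size)
    return (x0, y0), patch_coords(s, tx, ty, x0, y0, size)


if __name__ == "__main__":
    # Hypothetical 512x512 face crop: two eye keypoints and where they sit on the full body.
    s, tx, ty = fit_scale_translation([(192, 256), (320, 256)], [(0.48, 0.06), (0.52, 0.06)])
    print(s, tx, ty)   # scale per source pixel and translation into the full-body frame
    print(1.0 / s)     # equivalent full-body resolution this crop supervises
    corner, grid = sample_patch(512, 512, s, tx, ty, 64, random.Random(0))
    print(corner, grid[0][0])
```

In this toy example, `1 / s` is 3200, i.e. the face crop carries detail equivalent to a roughly 3200px-tall full-body image. This shows why mixing sources of different resolution yields supervision at multiple scales, whereas a holistic dataset alone yields only a single scale.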

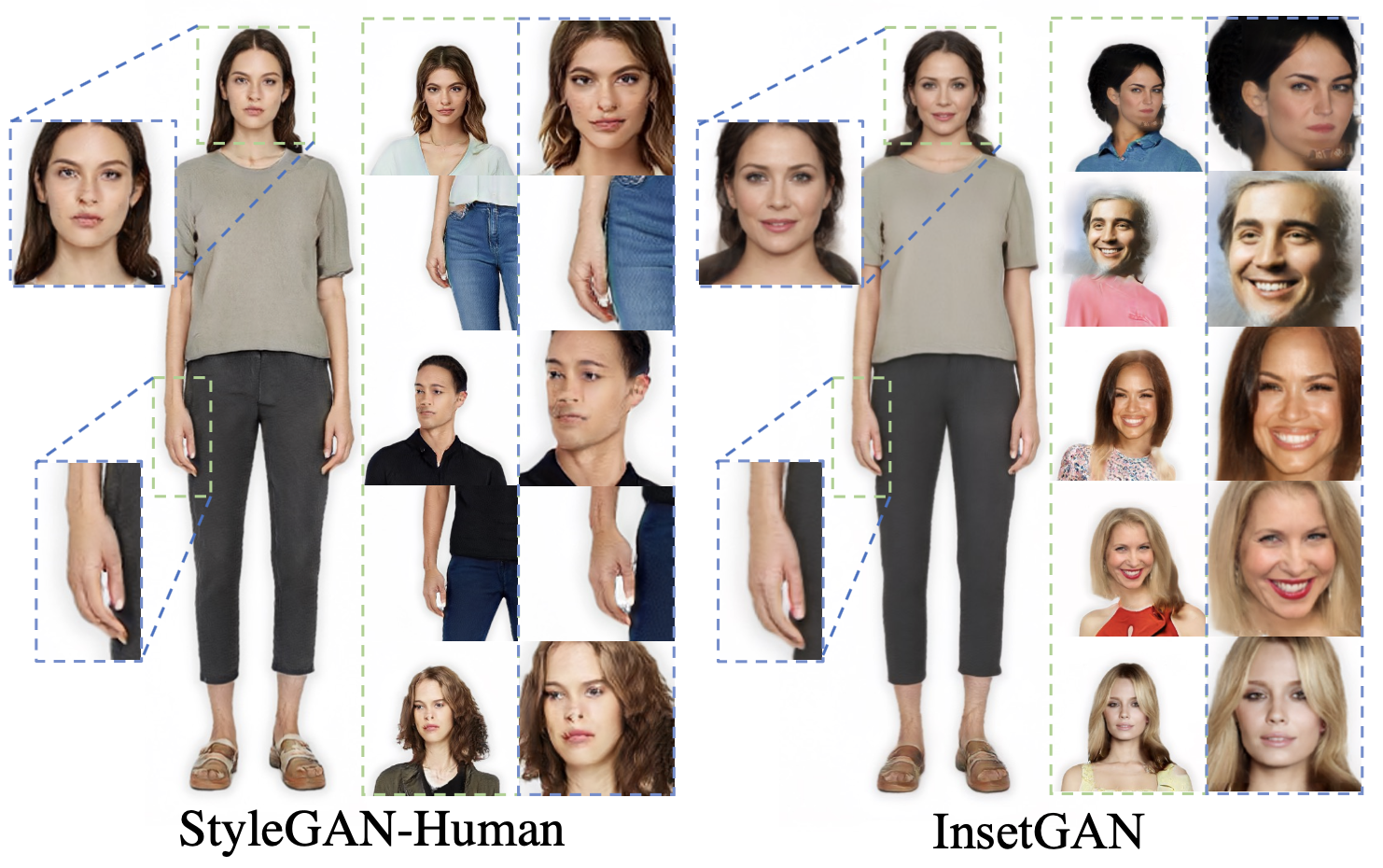

Here are the comparison results of StyleGAN-Human, InsetGAN, AnyRes, and UnitedHuman. For each method, we show full-body human images generated at a resolution of 1024px, as well as face and hand patches cropped from the corresponding 2048px images.

Here are more results generated by the proposed method.

An example of interpolation between two latent codes.

StyleGAN-Human proposes SHHQ, a full-body human dataset.

Text2Human proposes a text-driven controllable human image synthesis framework.

@article{fu2023unitedhuman,
  title   = {UnitedHuman: Harnessing Multi-Source Data for High-Resolution Human Generation},
  author  = {Fu, Jianglin and Li, Shikai and Jiang, Yuming and Lin, Kwan-Yee and Wu, Wayne and Liu, Ziwei},
  journal = {arXiv preprint},
  volume  = {arXiv:2309.14335},
  year    = {2023}
}